Data loss is expensive and common. In 2022, 26% of businesses experienced data loss, with recovery costs averaging $4.88 million. Ransomware attacks nearly doubled between 2022 and 2023, affecting 59% of organizations. A reliable VPS backup strategy is your best defense.

- Use True Backups, Not Just Replication: Replication mirrors changes instantly, including errors. Backups allow point-in-time recovery and file versioning.

- Choose the Right Backup Type:
  - Online Backups: No downtime, but resource-intensive.
  - Offline Backups: Reliable snapshots but require downtime.
  - Full Backups: Capture everything; ideal for weekly schedules.
  - Incremental Backups: Save only changes; perfect for daily updates.

- File-Level vs. System Image:
  - File-level is faster and uses less storage.
  - System images are better for full system recovery.

- Follow the 3-2-1 Rule: Keep 3 copies of data, on 2 types of media, with 1 copy off-site.

Here’s how to create a solid backup plan:

- Follow the 3-2-1-1-0 Rule:
  - 3 backup copies
  - 2 storage media types
  - 1 off-site copy
  - 1 offline/air-gapped copy
  - 0 errors during testing

- Prioritize Key Data: Protect databases, system files, and critical configurations.

- Set Recovery Goals: Define Recovery Time Objectives (RTO) for different systems (e.g., 15 min for payment systems).

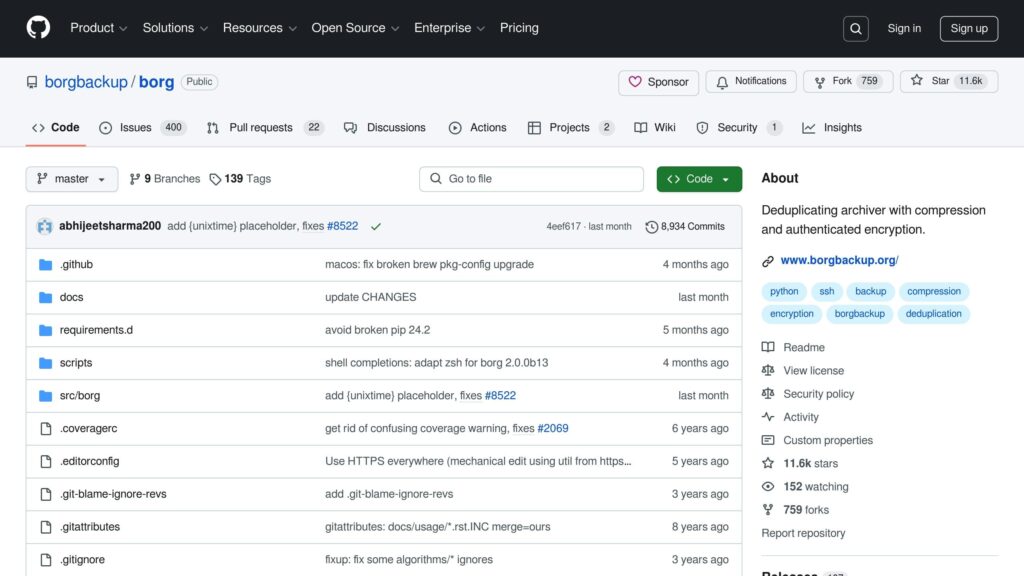

- Choose Backup Tools: Use Rsync for file syncing or BorgBackup for encryption and deduplication.

- Automate Backups: Schedule backups with Cron jobs based on data change rates and recovery needs.

- Encrypt and Test: Secure backups with AES-256 encryption and regularly test recovery processes.

- Store Off-Site: Use cloud storage or remote servers for disaster recovery.

Quick Comparison of Backup Types

| Backup Type | Pros | Cons |
|---|---|---|
| Online | No downtime, frequent backups | Higher resource usage |
| Offline | Consistent, reliable | Requires downtime |
| File-Level | Faster, less storage needed | Limited to specific files |
| System Image | Full recovery possible | Slower, more storage needed |

To start, assess your data needs, choose the right methods, and schedule backups during low-traffic times. Secure and monitor your backups regularly to ensure data integrity and recovery readiness.

Quick Comparison of Backup Tools

| Feature | Rsync | BorgBackup |
|---|---|---|
| Efficiency | Delta transfer (sends only changed parts of files) | Chunk-level deduplication across archives |
| Compression | In-transit compression (-z) | Multiple options (lz4, etc.) |
| Security | SSH tunnel encryption (in transit only) | AES-256 encryption |
| Backup Versions | Single copy | Multiple archival backups |

Building a Better Backup Strategy

Plan Your Backup Needs

When setting up a VPS backup, start by understanding your data and how quickly you’ll need to recover it if something goes wrong.

Identify Your Most Important Data and Systems

Make a list of the critical data and systems that your business can’t afford to lose.

“Consider what data you store on your server and whether that data is available elsewhere. For example, if you run an online store, your customer details and order/stock level data is considered critical. Product images and descriptions pulled from a supplier’s API may be less important.” [1]

Focus on these key areas:

- Databases: Information like customer details, transactions, and inventory

- Custom configurations: Server settings, app configurations, and security rules

- User content: Things like comments, reviews, and uploaded media

- System files: Operating system files and installed software

Decide How Quickly You Need Recovery

Your Recovery Time Objective (RTO) is the amount of time you can afford to be offline before it starts causing serious problems.

| Priority Level | Recovery Time | Examples |
|---|---|---|
| Tier 1/Gold | 15 min – 1 hr | Payment systems, customer databases |
| Tier 2/Silver | 1 hr – 4 hr | Content management, email servers |
| Tier 3/Bronze | 4 hr – 24 hr | Development tools, archives |

When setting your RTO, think about:

- Revenue impact: How much money could you lose during downtime?

- Customer agreements: Are there SLAs that require fast recovery?

- System dependencies: Which systems rely on each other to work?

- Recovery costs: Faster recovery often costs more – find the right balance.

Figure Out How Much Backup Space You Need

- Check your current data size.

- Plan for 20-30% growth over time.

- Set up retention rules, such as:
  - Daily incremental backups
  - Weekly full backups
  - Monthly archives
  - Yearly snapshots

You’ll also need extra storage if you use GFS (Grandfather-Father-Son) retention, which keeps multiple full backups for different time periods.
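The checklist above can be turned into a rough estimate. This is a minimal sketch under simplifying assumptions of our own: every full backup is as large as the projected data set, every incremental stores a fixed percentage of it, and compression and deduplication (which can shrink real numbers considerably) are ignored. The function name and argument order are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rough backup storage estimate in whole GB.
# Assumes each full backup = projected data size and each incremental =
# CHANGE_PCT of it; ignores compression and deduplication. Rounds down.
estimate_backup_storage_gb() {
  local current_gb="$1"   # current data size in GB
  local growth_pct="$2"   # growth headroom, e.g. 20-30
  local full_count="$3"   # full copies kept at once (weekly + monthly + yearly)
  local incr_count="$4"   # incremental copies kept at once
  local change_pct="$5"   # percentage of data changed between incrementals

  local projected=$(( current_gb * (100 + growth_pct) / 100 ))
  local fulls=$(( projected * full_count ))
  local incrs=$(( projected * change_pct * incr_count / 100 ))
  echo $(( fulls + incrs ))
}
```

For example, 100 GB of data with 25% headroom, 17 retained full copies (4 weekly, 12 monthly, 1 yearly) and 6 daily incrementals at a 5% change rate: estimate_backup_storage_gb 100 25 17 6 5 prints 2162, roughly 2.2 TB before compression. A deduplicating tool such as BorgBackup would usually need far less.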

Keep an eye on the size of your backups and adjust your storage as your data grows. This helps avoid failed backups and makes sure you’re using your resources wisely.
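Keeping an eye on backup size can be automated with a check that runs after each backup. A minimal sketch, assuming GNU coreutils (du, df --output); the function name and the 80% default threshold are our own choices, not a rule from this guide.

```shell
#!/usr/bin/env bash
# Hypothetical check: report backup size and warn when the filesystem that
# holds the backups is fuller than MAX_USED_PCT. Needs GNU du and df.
check_backup_space() {
  local target="$1"             # backup directory
  local max_used_pct="${2:-80}" # warning threshold (percent used)
  local size used_pct

  size=$(du -sh -- "$target" | cut -f1)
  # df prints a header line, then e.g. " 42%"; keep only the digits.
  used_pct=$(df --output=pcent -- "$target" | tail -n 1 | tr -dc '0-9')
  used_pct=${used_pct:-0}       # some pseudo-filesystems report "-"

  echo "$target: backups use $size; filesystem ${used_pct}% full (limit ${max_used_pct}%)"
  if [ "$used_pct" -gt "$max_used_pct" ]; then
    echo "WARNING: add storage or tighten retention for $target" >&2
    return 1
  fi
}
```

Run from cron after the nightly job (for example check_backup_space /backup 85), the warning on stderr lands in cron's e-mail and the non-zero exit status can feed other monitoring.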

Once you’ve outlined your backup needs, you can move on to choosing the right tools for the job.

Select Backup Software

Two popular tools for VPS backups are Rsync and BorgBackup.

Common VPS Backup Tools

Rsync and BorgBackup serve different purposes, depending on your backup requirements. Here’s a quick comparison:

| Feature | Rsync | BorgBackup |
|---|---|---|
| Efficiency | Delta transfer (sends only changed parts of files) | Chunk-level deduplication across archives |
| Compression | In-transit compression (-z) | Multiple options (lz4, zstd, zlib, lzma) |
| Security | SSH tunnel encryption (in transit only) | AES-256 encryption with HMAC verification |
| Backup Versions | Single backup copy | Supports multiple archival backups |

Using Rsync for Files

Rsync is a great choice for file synchronization. When copying to or from a remote host, it transfers only the parts of files that have changed since the last backup, saving bandwidth and time.

To install Rsync:

- On Debian/Ubuntu:

sudo apt-get install rsync

- On Fedora/CentOS:

sudo yum install rsync

Basic backup command (the trailing slash on source/ copies the directory's contents rather than the directory itself):

rsync -av source/ destination/

Remote backup command:

rsync -av -e ssh /local/path username@remote-vps:/backup/path
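For unattended runs (from cron, for example), it helps to record each transfer and its exit status. The sketch below is one way to do that; the function name, log format and the -z/--partial options are our assumptions. It deliberately leaves out --delete, so files removed on the VPS are not removed from the backup copy.

```shell
#!/usr/bin/env bash
# Minimal sketch (hypothetical helper): run rsync and append a timestamped
# record of the result to a log. SRC/DEST may be local or user@host:/path.
rsync_backup() {
  local src="$1" dest="$2" logfile="$3"
  local status=0

  printf '%s start %s -> %s\n' "$(date -Is)" "$src" "$dest" >>"$logfile"
  # -a: archive mode (recursive; keeps permissions, times, symlinks)
  # -z: compress in transit; --partial: keep partly sent files for resuming
  # No --delete: files removed at the source stay in the backup copy.
  rsync -az --partial -e ssh "$src" "$dest" >>"$logfile" 2>&1 || status=$?
  printf '%s end exit_status=%d\n' "$(date -Is)" "$status" >>"$logfile"
  return "$status"
}
```

Usage: rsync_backup /local/path/ username@remote-vps:/backup/path/ /var/log/rsync-backup.log. Rsync exits with 0 only on success, so the logged exit_status is an easy thing to check during the daily log review.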

If you need stronger encryption and better compression options, BorgBackup might be a better fit.

Using BorgBackup for Security

BorgBackup is designed for secure and efficient backups, especially in environments with frequent updates. It supports advanced deduplication, AES-256 encryption, and various compression algorithms.

Setting up BorgBackup:

- Initialize an encrypted repository:

borg init -e repokey /path/to/repo

- Create your first backup archive:

borg create /path/to/repo::Saturday1 ~/Documents

BorgBackup is particularly useful for VPS setups that require regular updates, offering both strong encryption and efficient data management.
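The two commands above create a single archive; a nightly job would typically also thin out old archives. The sketch below (Borg 1.x syntax) maps the daily/weekly/monthly/yearly retention idea from the planning section onto borg prune. The repository path, passphrase file and source directories are hypothetical placeholders, and borg compact needs Borg 1.2 or later.

```shell
#!/usr/bin/env bash
# Sketch of a nightly Borg 1.x job with GFS-style retention.
# Hypothetical placeholders: repository path, passphrase file, source paths.
set -eu

export BORG_REPO=/path/to/repo
# Borg runs this command to read the passphrase, so it is not stored in the script.
export BORG_PASSCOMMAND="cat /root/.borg-passphrase"

borg_nightly() {
  # New deduplicated, lz4-compressed archive named after host and time;
  # "::name" means "archive name inside $BORG_REPO".
  borg create --stats --compression lz4 \
    "::{hostname}-{now:%Y-%m-%dT%H:%M:%S}" \
    /etc /var/www /home

  # Keep 7 daily, 4 weekly, 12 monthly and 1 yearly archive; prune the rest.
  borg prune --list \
    --keep-daily 7 --keep-weekly 4 --keep-monthly 12 --keep-yearly 1

  # Borg 1.2+: free the disk space released by prune.
  borg compact
}
```

A script ending with a call to borg_nightly could then be scheduled from cron like the other jobs in this guide.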

Set Up Automatic Backups

Schedule with Cron Jobs

Using Cron jobs is a straightforward way to automate VPS backups.

To get started, edit your Cron configuration:

crontab -e

Here are some examples of Cron schedules for different backup types:

| Backup Type | Cron Syntax | Description |
|---|---|---|
| Daily Full | 0 3 * * * /usr/local/bin/backup.sh | Runs at 3:00 AM every day |
| Weekly System | 0 0 * * 0 /usr/local/bin/system_backup.sh | Runs every Sunday at midnight |
| Frequent Data | */15 * * * * /usr/bin/backup.sh | Runs every 15 minutes |
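A short interval such as */15 can start a new run while the previous one is still copying. One common safeguard, sketched here with a hypothetical helper, is a lock around the job: mkdir is atomic, so only one run can create the lock directory (util-linux flock(1) is a well-known alternative). The exit code 75 and the lock path are our own choices.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run a command only if no other run holds the lock.
# mkdir is atomic, so two overlapping cron runs cannot both create LOCKDIR.
run_exclusive() {
  local lockdir="$1"
  shift
  if ! mkdir -- "$lockdir" 2>/dev/null; then
    echo "skipping: previous run still active ($lockdir exists)" >&2
    return 75   # EX_TEMPFAIL: try again at the next scheduled time
  fi
  local status=0
  "$@" || status=$?
  rmdir -- "$lockdir"   # release the lock whatever the command's result
  return "$status"
}
```

In the script that cron starts: run_exclusive /var/lock/backup.lock /usr/bin/backup.sh. If the shell itself is killed mid-run, the lock directory stays behind and must be removed by hand (or by a trap), so repeated "skipping" messages are worth alerting on.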

“Cron does one thing, and does it well: it runs commands on a schedule.” – Victoria Drake, freeCodeCamp News [4]

Decide on a backup schedule that aligns with your specific operational needs.

Pick Backup Intervals

Your backup interval should match how often your data changes and how quickly you need to recover it. Here are some key points to consider:

- Data Change Rate: If your databases are updated often, schedule backups more frequently. Static content requires less frequent backups.

- Off-Peak Scheduling: Run resource-heavy backups during times of low activity to minimize disruptions.

- Recovery Needs: Critical systems might need hourly backups, while less essential data can be backed up weekly.

- Storage Limitations: Ensure your backup destination has enough space to handle your chosen backup frequency.

For high-priority data, you can create a multi-tier backup schedule like this:

0 * * * * /usr/bin/rsync -az /critical/data /backup/hourly
0 2 * * * /usr/local/bin/backup.sh --full
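The schedule above calls a backup.sh with a --full flag, but the script itself isn't shown. The sketch below is one hypothetical way such a script could produce full and incremental archives, using GNU tar's --listed-incremental snapshot file (our choice of technique, not necessarily what the original script does): removing the snapshot file forces a full, level-0 archive, and later runs store only files that changed since the previous run.

```shell
#!/usr/bin/env bash
# Hypothetical core of a backup.sh: full vs. incremental archives with GNU tar.
# Usage: tar_backup full|incremental SRC_DIR DEST_DIR   (prints the archive path)
tar_backup() {
  local mode="$1" src="$2" dest="$3"
  local snapshot="$dest/backup.snar"   # tar's record of what was already saved
  local archive

  mkdir -p -- "$dest" || return
  case "$mode" in
    full) rm -f -- "$snapshot" ;;      # no snapshot file => full (level-0) dump
    incremental) ;;                    # reuse it => only changes since last run
    *) echo "mode must be 'full' or 'incremental'" >&2; return 2 ;;
  esac

  # One-second timestamps: two runs of the same mode within a second would collide.
  archive="$dest/$mode-$(date +%Y%m%dT%H%M%S).tar.gz"
  tar --create --gzip --file="$archive" \
      --listed-incremental="$snapshot" \
      --directory="$(dirname "$src")" "$(basename "$src")" || return
  echo "$archive"
}
```

The 2 AM entry could map to tar_backup full /var/www /backup/tar, and more frequent runs to tar_backup incremental /var/www /backup/tar (paths hypothetical). To restore, extract the full archive first and then each incremental in order, passing --listed-incremental=/dev/null to tar --extract as the GNU tar manual recommends.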

After setting up your schedule, focus on managing the impact of backups on your server’s performance.

To minimize performance issues, schedule backups during times of low system activity. Here’s a suggested framework:

| Backup Type | Timing | Retention Period |
|---|---|---|
| Full System | Weekly (Sunday, 2 AM) | 2 copies |
| Database | Daily (3 AM) | 7 days |
| Configuration | Weekly | 4 copies |
| User Files | Daily (4 AM) | 14 days |
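A retention table like this one can be enforced with a small cleanup step after each run. This minimal sketch assumes (hypothetically) that each backup type lives in its own directory with file names that embed a sortable timestamp, such as db-20240105T0300.sql.gz; it covers both age-based ("7 days") and count-based ("2 copies") rules. GNU find is assumed.

```shell
#!/usr/bin/env bash
# Hypothetical retention helpers. Both print the files they delete.

# Age rule: delete matching files whose age exceeds DAYS whole days.
# Note: find -mtime +7 matches files at least 8 full days old (ages round down).
prune_older_than() {
  local dir="$1" pattern="$2" days="$3"
  find "$dir" -maxdepth 1 -type f -name "$pattern" -mtime +"$days" -print -delete
}

# Count rule: keep only the newest KEEP files, judged by the timestamp in the name.
prune_keep_last() {
  local dir="$1" pattern="$2" keep="$3"
  find "$dir" -maxdepth 1 -type f -name "$pattern" -print |
    sort -r |                       # newest names first
    tail -n +"$(( keep + 1 ))" |    # everything after the first KEEP entries
    while IFS= read -r old; do
      rm -f -- "$old"
      echo "$old"
    done
}
```

prune_older_than /backup/db 'db-*.sql.gz' 7 and prune_keep_last /backup/system 'full-*.tar.gz' 2 would match the Database and Full System rows; their output is worth keeping in the backup log.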

Always schedule a backup before making significant changes to your site [5].

Manage Server Load

To avoid overloading your server during backups, follow these tips:

- Throttling: Limit resource usage. For example, with rsync you can add --bwlimit=1000 to cap bandwidth at about 1,000 KiB per second.

- Compression: Compress data during transfer and storage (for example, rsync -z or Borg's --compression lz4) to reduce backup size and network traffic, at the cost of some extra CPU time.

- Monitor Resources: Keep an eye on key metrics to ensure your server stays stable:
  - CPU usage
  - Disk I/O
  - Network bandwidth
  - Available storage space
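Beyond rsync's bandwidth limit, a backup command's CPU and disk priority can be lowered with standard Linux tools. A minimal wrapper, assuming coreutils nice and, if installed, util-linux ionice; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: run a backup command at the lowest CPU priority and,
# where ionice is available, in the "idle" I/O scheduling class.
low_priority() {
  if command -v ionice >/dev/null 2>&1; then
    # -c 3 = idle I/O class; -t = still run the command if the class can't be set
    nice -n 19 ionice -c 3 -t "$@"
  else
    nice -n 19 "$@"
  fi
}
```

Usage: low_priority rsync -az --bwlimit=1000 /data/ backup-host:/backup/data/ (host and paths hypothetical). The idle class asks the kernel to give the job disk time mainly when nothing else needs it; how strictly that is honored depends on the I/O scheduler in use.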

Balancing backup frequency and server performance is crucial to maintaining smooth operations.

Protect Backup Files

Set Up Encryption

Use AES-256 encryption to secure your VPS backups during both transfer and storage.

# Initialize an encrypted repository

borg init --encryption=repokey-blake2 /path/to/repo

# Create an encrypted backup with compression

borg create --compression lz4 /path/to/repo::backup-{now} /data

To enhance security, store encryption keys separately from the backups. For added protection, consider using a hardware security module (HSM) [5].
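Borg handles encryption itself. For plain archives created outside Borg (tar files, database dumps), a comparable approach swaps in OpenSSL: the sketch below encrypts a file with AES-256 and reads the key from a separate file that should live outside the backup location. It assumes OpenSSL 1.1.1 or later (for -pbkdf2); the function names and paths are hypothetical. Note that openssl enc provides confidentiality but no tamper detection, so pair it with checksums or prefer Borg's authenticated encryption.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: AES-256 encryption of plain backup files with OpenSSL.
# Keep KEYFILE away from the backups it protects.

make_backup_key() {
  local keyfile="$1"
  # umask 077 makes the new key file readable by its owner only (mode 600).
  ( umask 077 && openssl rand -base64 48 >"$keyfile" )
}

encrypt_backup() {
  local input="$1" keyfile="$2"
  # Writes INPUT.enc; -pbkdf2 derives the AES key from the key file's contents.
  openssl enc -aes-256-cbc -pbkdf2 -salt \
    -in "$input" -out "$input.enc" -pass "file:$keyfile"
}

decrypt_backup() {
  local input="$1" keyfile="$2" output="$3"
  openssl enc -d -aes-256-cbc -pbkdf2 \
    -in "$input" -out "$output" -pass "file:$keyfile"
}
```

Run make_backup_key /root/keys/backup.key once; encrypt_backup /backup/db.tar.gz /root/keys/backup.key then writes /backup/db.tar.gz.enc. Store a copy of the key in a separate secure location, or an HSM as suggested above.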

Control Backup Access

Limit access to your backups by enforcing strict file permissions. Stick to the principle of least privilege to minimize risks:

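# Set restrictive permissions on the backup directory
chown backup-admin:backup-admin /backup
chmod 700 /backup
# Configure specific user access: backup-user needs execute (traverse) on /backup to reach the subfolder
setfacl -m u:backup-user:x /backup
setfacl -m u:backup-user:rx /backup/specific-folder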

Set up dedicated user accounts solely for backup purposes, granting them only the permissions they need. Regularly monitor access attempts, and keep detailed logs of all backup operations to identify any unauthorized activities.
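To support these regular checks, a small audit can flag backup files that drift from least privilege. A minimal sketch assuming GNU find; the function name and the idea of a single dedicated owner account are our assumptions. Named-user ACL entries (such as the setfacl grants above) do not change the "other" permission bits, so they are not flagged.

```shell
#!/usr/bin/env bash
# Hypothetical audit: list entries under a backup directory that any user can
# access, or that are not owned by the dedicated backup account.
audit_backup_permissions() {
  local dir="$1" owner="$2" problems=0 found

  # -perm /o=rwx: any read, write or execute bit set for "other" (GNU find).
  found=$(find "$dir" -perm /o=rwx -print)
  if [ -n "$found" ]; then
    printf 'WARN: accessible to all users:\n%s\n' "$found"
    problems=1
  fi

  # Anything not owned by the backup account (user name or numeric UID).
  found=$(find "$dir" ! -user "$owner" -print)
  if [ -n "$found" ]; then
    printf 'WARN: not owned by %s:\n%s\n' "$owner" "$found"
    problems=1
  fi
  return "$problems"
}
```

audit_backup_permissions /backup backup-admin could run daily, with its output appended to the backup operations log.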

By combining encryption with controlled access, your backups will stay secure and ready for recovery when needed.

Check Backup Quality

Surprisingly, only 5.26% of organizations test their backups each quarter [6], leaving most data unverified and potentially unusable.

Follow these steps to verify your backups:

| Verification Task | Frequency | Purpose |
|---|---|---|
| Log Review | Daily | Ensure backups completed without errors |
| Checksum Validation | Weekly | Confirm file integrity |
| Recovery Test | Monthly | Test if data can be restored |
| Full System Recovery | Quarterly | Validate the entire backup process |

Use checksums to confirm backup file integrity:

# Generate a checksum for the backup file
sha256sum backup.tar.gz > backup.checksum
# Verify backup integrity
sha256sum -c backup.checksum
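The single-file check above extends naturally to a whole backup directory: write one manifest after each backup run and re-verify it on the weekly schedule from the table. A minimal sketch assuming GNU coreutils; the function names and the SHA256SUMS file name are our choices.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: record SHA-256 checksums for every archive in a
# backup directory, then re-verify them later.

write_manifest() {
  local dir="$1"
  # Subshell + cd so the manifest stores relative names and can move with the files.
  ( cd -- "$dir" && find . -maxdepth 1 -type f -name '*.tar.gz' -print0 |
      sort -z | xargs -0 -r sha256sum >SHA256SUMS )
}

verify_manifest() {
  local dir="$1"
  # --quiet prints only failures; exit status is non-zero if any file changed or vanished.
  ( cd -- "$dir" && sha256sum --check --quiet SHA256SUMS )
}
```

Typical use: write_manifest /backup/tar at the end of each run, and verify_manifest /backup/tar from a weekly cron job whose failures are reported like any other backup error.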

Once you’ve verified that your backups are intact and accessible, move them to secure off-site storage for added safety.

Store Backups Off-Site

Once your backup files are secure, keep them off-site to protect against any issues at your primary location.

Multiple Location Storage

Follow the 3-2-1 rule: keep three copies of your data, use two different types of storage media, and store one copy off-site. This method reduces the risk of losing data to physical disasters, hardware malfunctions, or cyberattacks.

Spread your backups across different locations:

| Storage Type | Purpose | Recommended Update Frequency |
|---|---|---|
| Primary Backup | Quick local recovery | Daily |
| Secondary Backup | On-site redundancy | Weekly |
| Off-site Copy | Disaster recovery | Weekly or Monthly |
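A plan can be checked against the 3-2-1 rule mechanically if you keep a short inventory of where each copy lives. This sketch assumes a hypothetical plain-text inventory with one copy per line (name, media type, onsite or offsite), counting the live data as one of the three copies.

```shell
#!/usr/bin/env bash
# Hypothetical 3-2-1 check. Inventory format (one copy per line, # for comments):
#   NAME  MEDIA  LOCATION      e.g.  production  ssd  onsite
check_321() {
  awk '
    NF == 0 || $1 ~ /^#/ { next }      # skip blank lines and comments
    { copies++; media[$2] = 1 }
    $3 == "offsite" { offsite++ }
    END {
      kinds = 0
      for (m in media) kinds++
      printf "copies=%d media_types=%d offsite=%d\n", copies, kinds, offsite
      # Exit 0 only with 3+ copies, 2+ media types and 1+ off-site copy.
      exit !(copies >= 3 && kinds >= 2 && offsite >= 1)
    }' "$@"
}
```

check_321 /etc/backup-inventory.txt (file name hypothetical) prints the counts and exits non-zero when any part of the rule is unmet.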

Add Cloud Storage

To simplify off-site storage, sync backups automatically with a cloud provider:

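# Set up rclone configuration for cloud storage (interactive)
rclone config
# Mirror local backups to the cloud remote. Note: sync also deletes remote files
# that no longer exist locally; rclone copy never deletes at the destination.
rclone sync /backup/files remote:backup-folder \
  --progress \
  --tpslimit 10 \
  --transfers 8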

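For extra security, make the off-site copy tamper-resistant. On AWS S3, true write-once (immutable) storage comes from S3 Object Lock; a bucket policy that denies deletions is a simpler safeguard, best combined with bucket versioning so that overwritten objects keep their previous versions. The policy must not deny s3:PutObject, or the backup job itself could no longer upload:

# Example AWS S3 bucket policy that blocks object deletion (uploads still allowed)
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Deny",
      "Principal": "*",
      "Action": ["s3:DeleteObject", "s3:DeleteObjectVersion"],
      "Resource": "arn:aws:s3:::your-bucket/*"
    }
  ]
}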

With cloud storage in place, ensure secure data transfers to remote servers.

Transfer to Remote Servers

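Set up an SSH key with strict limitations for backup operations. On the server the backup job connects to, add the job's public key to ~/.ssh/authorized_keys with a forced command and forwarding disabled:

# Configure SSH key with restricted commands (authorized_keys entry)
command="rsync --server --sender -vlogDtpr . /backup/",no-port-forwarding,no-X11-forwarding,no-agent-forwarding,no-pty ssh-rsa AAAAB3Nz...

SSH runs the forced command no matter what the client requests, so its options must match the client's rsync call. With --sender, the server sends /backup/ to the client (a pull, such as a restore); for a key that should only receive pushed backups, leave out --sender.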

Use append-only repositories to maintain backup integrity:

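# Create append-only backup repository (Borg 1.1 and later require an encryption mode)
borg init --encryption=repokey-blake2 --append-only /path/to/backup
# Configure remote repository
borg init --encryption=repokey-blake2 --append-only ssh://backup-server/path/to/backup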

“While my focus has been primarily on digital media, the 3-2-1 principles are pretty universal. In fact, the ‘rule’ itself was simply a synopsis of the practices that I found among IT professionals, as I was writing my first book. I just gave it a catchy name.” – Peter Krogh [8]

Strengthen your setup further with Identity and Access Management (IAM). Assign upload-only permissions for backup processes, enforce multi-factor authentication for deletions, and limit access to reduce the risk of accidental or malicious data loss [7].

Test Your Backup System

Regular testing ensures your backup system is reliable. On average, organizations test their backups only every eight days [6], highlighting the need for more frequent and thorough recovery testing. Start by checking the integrity of recovered data, then move on to simulating full system failures.

Test Data Recovery

Review backup logs for any errors or warnings. Automated monitoring can help track key metrics:

| Test Type | Frequency | Metrics |
|---|---|---|
| File Integrity | Daily | Checksum verification, file count |
| Mount Testing | Weekly | Access speed, file system errors |
| Full Recovery | Monthly | Recovery time, data completeness |
| Storage Health | Quarterly | Disk health, space utilization |

Automating log reviews and comparing file counts and checksums before and after backups can save time. Perform mount tests to catch file system issues early. Tools like Vorta allow you to browse backed-up files and confirm their integrity [9].
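The log review and file-count comparison described here are easy to script. A minimal sketch with hypothetical function names; the error/fail/warn pattern is only a starting point (it also matches harmless lines such as "0 errors"), so tune it to the messages your tools actually print.

```shell
#!/usr/bin/env bash
# Hypothetical daily checks for backup logs and restored copies.

# Succeeds only if the log exists and has no error/failure/warning lines;
# matching lines are printed with their line numbers.
scan_backup_log() {
  local logfile="$1"
  if [ ! -r "$logfile" ]; then
    echo "missing or unreadable log: $logfile" >&2
    return 2
  fi
  ! grep -n -i -E 'error|fail|warn' -- "$logfile"
}

# Compares regular-file counts between the source and a restored or mounted copy.
compare_file_counts() {
  local source_dir="$1" backup_dir="$2" src_count bak_count
  src_count=$(find "$source_dir" -type f | wc -l)
  bak_count=$(find "$backup_dir" -type f | wc -l)
  echo "source=$src_count backup=$bak_count"
  [ "$src_count" -eq "$bak_count" ]
}
```

For example, scan_backup_log /var/log/rsync-backup.log each morning, and compare_file_counts /var/www /mnt/borg-mount/var/www after mounting an archive (paths hypothetical).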

Run Recovery Drills

Prepare for real-world scenarios by testing these recovery situations:

1. Hardware Failure Simulation

Measure recovery time and identify any missing dependencies [12].

2. Ransomware Recovery

Practice restoring from encrypted backup snapshots to ensure your backups are resistant to ransomware. Test both incremental and full restores [12].

3. Cross-Region Recovery

Check your ability to restore services in different geographical areas. This step ensures your disaster recovery plan works across multiple data centers [11].
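Each drill comes down to the same measurable step: restore into a scratch location, time it, and compare the result with known-good data. A minimal sketch for tar archives; the function name is hypothetical, and it assumes the archive stores the data directory under its own name (as tar --directory=PARENT NAME produces). It measures extraction only, not the time needed to provision a replacement server.

```shell
#!/usr/bin/env bash
# Hypothetical restore drill: extract ARCHIVE into a scratch directory, time
# it, and compare the restored tree with REFERENCE_DIR (known-good data).
restore_drill() {
  local archive="$1" reference_dir="$2"
  local scratch start elapsed status=0 result=ok
  scratch=$(mktemp -d) || return

  start=$(date +%s)
  tar --extract --gzip --file="$archive" --directory="$scratch" || status=$?
  elapsed=$(( $(date +%s) - start ))

  # diff -r -q reports missing, extra or changed files and exits non-zero.
  if [ "$status" -eq 0 ] &&
     ! diff -r -q "$reference_dir" "$scratch/$(basename "$reference_dir")"; then
    status=1
  fi
  [ "$status" -eq 0 ] || result=FAILED

  echo "restore_seconds=$elapsed result=$result"
  rm -rf -- "$scratch"
  return "$status"
}
```

Run on a test machine with a known-good copy of the data, the restore_seconds value can be compared directly with the RTO tiers set earlier.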

Update Backup Methods

“Realistic backup testing is an indispensable part of a robust data protection strategy.” [12]

Track metrics like recovery time, success rates, data integrity, and system performance. Document test results and adjust your backup procedures based on the findings. Keeping your backup software up-to-date is crucial for maintaining its reliability and security [10].

“Untested backups are like Schrödinger’s cat. Nobody knows they’re dead or alive.”

Use automated scripts to regularly check backup integrity and maintain detailed logs of all recovery attempts. This proactive approach can help you catch problems before they affect your live environment.
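Recording every drill in one place makes metrics such as recovery time and success rate easy to track over time. A minimal sketch that appends to a CSV file and summarizes it with awk; the file layout and function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical recovery-test log: one CSV line per drill, plus a summary.

log_recovery_test() {
  local csv="$1" system="$2" seconds="$3" result="$4"   # result: ok or failed
  [ -s "$csv" ] || echo "timestamp,system,seconds,result" >"$csv"
  echo "$(date -Is),$system,$seconds,$result" >>"$csv"
}

summarize_recovery_tests() {
  local csv="$1"
  awk -F, 'NR > 1 {                     # skip the header line
      runs++; total += $3
      if ($4 == "ok") ok++
    }
    END {
      if (runs == 0) { print "no recovery tests logged"; exit 1 }
      printf "runs=%d success_rate=%.0f%% avg_seconds=%.1f\n",
             runs, 100 * ok / runs, total / runs
    }' "$csv"
}
```

After each drill, log_recovery_test /var/log/recovery-tests.csv db 120 ok records the result; summarize_recovery_tests /var/log/recovery-tests.csv gives the figures to review when adjusting backup procedures.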

Wrapping It Up

Having a solid VPS backup plan is key to keeping your data safe. Regularly testing and updating your backups ensures your data stays intact and your business can keep running smoothly. Skipping these tests could lead to failed recoveries when you need them most [1].

A good backup plan focuses on a few important elements. For instance, combining full backups with incremental updates helps reduce risks and makes recovery easier [3][13].

Here are three key factors that make a backup system reliable:

- Multiple Storage Locations: Storing backups in different physical locations adds redundancy and protects against large-scale disasters [13].

- Automated Testing: Regularly testing your backups by restoring them confirms they work and that your data is recoverable [14].

- Documentation: Clear, detailed recovery procedures make it easier to restore your data quickly when time is of the essence [2].

These steps work together to ensure you can recover your data when it matters most.