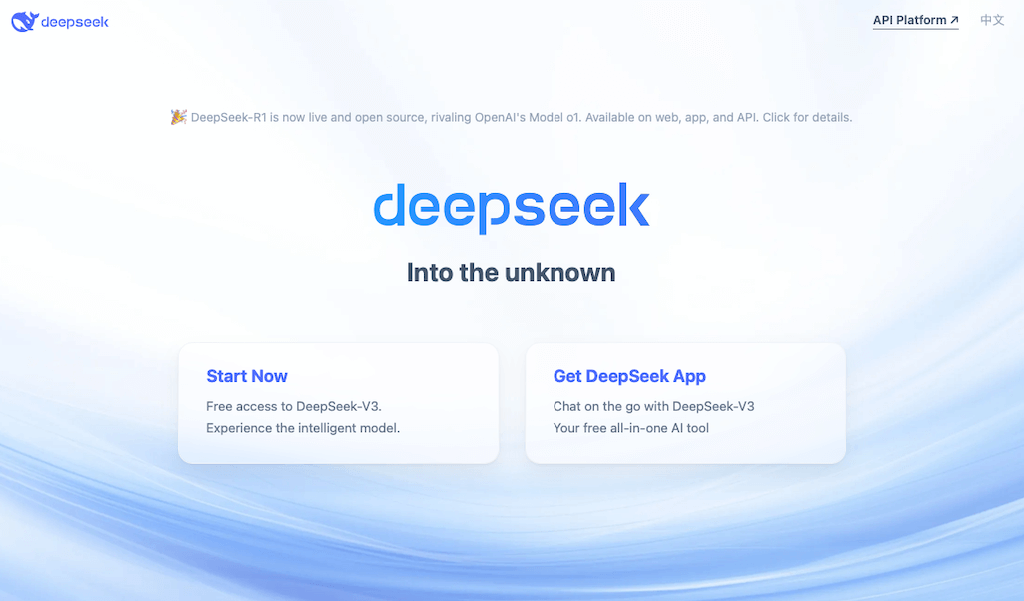

Want to run advanced AI tools on a VPS? Here’s a quick guide to setting up DeepSeek-R1 (an open-weight reasoning model that Ollama offers in several sizes, up to 70B parameters and beyond) and Ollama (a tool for downloading and serving large language models) on a VPS. This setup is ideal for enterprises, researchers, and startups needing reliable, scalable AI solutions.

Key Steps

- VPS Requirements: At least 8 GB RAM, 4 vCPUs, and 12GB storage (NVMe SSD recommended for speed).

- System Setup: Update your OS (Ubuntu 24.04 or CentOS 8+), secure your VPS with a firewall, and optimize performance with swap space and TCP tweaks.

- Install Ollama: Use a simple script to install Ollama for managing LLMs.

- Deploy DeepSeek: Download a DeepSeek-R1 model (the 7B variant fits the minimum specs; the 70B variant needs far more RAM and storage) and run it as a systemd service for continuous operation.

Benefits:

- Faster Performance: Up to 40% faster inference on server-grade hardware.

- Scalability: Handle peak demands with ease.

- Reliability: 99.95% uptime and NVMe storage for quick model loading.

Follow this guide to transform your VPS into a powerful AI platform.

How to Install and Run DeepSeek Using Ollama on Your VPS

VPS Setup Requirements

System Requirements

To run DeepSeek efficiently, your VPS needs specific hardware configurations. The minimum and recommended specifications are:

| Component | Minimum Requirement | Recommended |
|---|---|---|
| RAM | 8 GB | 32 GB |
| CPU Cores | 4 vCPU | 8 vCPU |
| Storage | 12 GB available space | 200 GB NVMe SSD |
| Network | 1 Gbps | 1 Gbps, unmetered |
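Before installing anything, it can help to check a machine against the minimums in the table. The sketch below is a hypothetical helper (`meets_minimum` and `check_this_host` are our names, not part of any tool). It assumes a Linux VPS with GNU coreutils, reads RAM from `/proc/meminfo`, vCPUs from `nproc`, and free space on `/` from `df`.

```bash
# Hypothetical preflight check against the minimum specs in the table:
# 8 GB RAM, 4 vCPU, 12 GB of free disk space.
MIN_RAM_GB=8
MIN_CPUS=4
MIN_DISK_GB=12

# meets_minimum RAM_GB CPUS DISK_GB
# Prints one line per failed check; returns 1 if any check fails.
meets_minimum() {
  local status
  status=0
  if [ "$1" -lt "$MIN_RAM_GB" ]; then
    echo "RAM: $1 GB < $MIN_RAM_GB GB"
    status=1
  fi
  if [ "$2" -lt "$MIN_CPUS" ]; then
    echo "CPU: $2 vCPU < $MIN_CPUS vCPU"
    status=1
  fi
  if [ "$3" -lt "$MIN_DISK_GB" ]; then
    echo "Disk: $3 GB free < $MIN_DISK_GB GB"
    status=1
  fi
  return "$status"
}

# check_this_host: gather the real values on a Linux VPS and run the check.
check_this_host() {
  local mem_kb ram_gb cpus disk_gb
  mem_kb=$(awk '/^MemTotal:/ { print $2 }' /proc/meminfo)
  # MemTotal is in kB and sits a little below the nominal plan size,
  # so convert to GiB and round to the nearest whole number.
  ram_gb=$(( (mem_kb + 524288) / 1048576 ))
  cpus=$(nproc)
  # Free space on the root filesystem, in 1 GiB blocks (GNU df).
  disk_gb=$(df -BG --output=avail / | tail -n 1 | tr -dc '0-9')
  meets_minimum "$ram_gb" "$cpus" "$disk_gb"
}
```

Running `check_this_host && echo "Minimum specs met"` prints nothing but the success message when every minimum is satisfied.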

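You’ll also need either Ubuntu 24.04 LTS or CentOS 8+ as your operating system. These options provide a solid foundation for AI workloads, offering stability and regular security updates [3]. The commands in this guide use Ubuntu’s `apt` and `ufw`; on CentOS, use the `dnf` and `firewalld` equivalents. Note that CentOS Linux 8 itself reached end of life in December 2021 and no longer receives security updates, so on the RHEL side prefer a currently supported release.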
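Since the guide allows Ubuntu or CentOS but its commands use `apt`, a small helper can tell you which package manager a host expects. This is a hypothetical sketch (`pkg_manager` is our name) that reads the standard `ID` and `ID_LIKE` fields from `/etc/os-release`; the mapping only covers the distribution families named here.

```bash
# Hypothetical helper: print "apt" or "dnf" for the current distribution,
# based on the ID and ID_LIKE fields in os-release.
# pkg_manager [OS_RELEASE_FILE]   (defaults to /etc/os-release)
pkg_manager() {
  local file ids
  file=${1:-/etc/os-release}
  # Source the file inside a command substitution (a subshell),
  # so its variables don't leak into the calling shell.
  ids=$(. "$file" && printf '%s %s' "${ID:-}" "${ID_LIKE:-}")
  case " $ids " in
    *" ubuntu "* | *" debian "*) echo apt ;;
    *" rhel "* | *" centos "* | *" fedora "*) echo dnf ;;
    *) echo unknown; return 1 ;;
  esac
}
```

For example, `[ "$(pkg_manager)" = apt ] || echo "Translate the apt/ufw commands for this distribution"`.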

Choosing Your VPS

When picking a VPS provider, prioritize those offering configurations tailored for AI workloads. For instance, VPS.us offers LLM hosting plans such as KVM8-US ($80/month), which includes 4 vCPU cores and NVMe storage and meets the minimum requirements. If you’re setting up for production, consider these key factors:

- Network Performance: Opt for providers with stable and fast connectivity.

- Storage Type: NVMe SSD storage is ideal for faster read/write speeds.

- Scalability: Ensure the provider supports easy resource upgrades.

- Geographic Location: Select data centers close to your primary users to reduce latency.

Basic VPS Setup Steps

Follow these steps to configure your VPS for optimal performance and security:

1. System Updates and Security

Start by updating your system and installing basic security tools:

```bash
sudo apt update && sudo apt upgrade -y
sudo apt install ufw fail2ban
```
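Upgrades sometimes install a new kernel that only takes effect after a restart. On Ubuntu, the update tooling signals this by creating `/var/run/reboot-required` and lists the responsible packages in `/var/run/reboot-required.pkgs`. This hypothetical helper (`reboot_required` is our name) checks for that file, with the path overridable for testing.

```bash
# Hypothetical helper: succeed (and list the packages) if Ubuntu has flagged
# that a reboot is needed after the last upgrade.
# reboot_required [FLAG_FILE]   (defaults to /var/run/reboot-required)
reboot_required() {
  local flag
  flag=${1:-/var/run/reboot-required}
  if [ -f "$flag" ]; then
    echo "Reboot required by:"
    # The .pkgs file may be missing; that is not an error here.
    cat "$flag.pkgs" 2>/dev/null || true
    return 0
  fi
  return 1
}
```

A typical use after upgrading is `reboot_required && sudo reboot`.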

2. Network Security Configuration

Set up your firewall to allow essential ports:

```bash
sudo ufw allow OpenSSH
sudo ufw allow 80/tcp
sudo ufw allow 443/tcp
sudo ufw allow 8080/tcp
sudo ufw enable
```
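Enabling ufw over SSH without an SSH rule would cut off your session, which is why `OpenSSH` is allowed before `ufw enable`. To double-check the result, this hypothetical helper (`ufw_allows` is our name) reads the output of plain `sudo ufw status` (not the `numbered` or `verbose` forms) and succeeds if a rule is listed as `ALLOW`. It only inspects the IPv4 lines.

```bash
# Hypothetical helper: read `ufw status` output on stdin and succeed if
# RULE (for example "OpenSSH" or "443/tcp") appears with action ALLOW.
# IPv6 lines ("OpenSSH (v6) ...") are skipped because their second field is "(v6)".
ufw_allows() {
  awk -v rule="$1" '$1 == rule && $2 == "ALLOW" { found = 1 }
                    END { exit !found }'
}
```

For example: `sudo ufw status | ufw_allows OpenSSH || echo "SSH rule missing: fix it before logging out"`.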

3. Performance Optimization

Enhance performance by creating swap space and tweaking network settings:

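```bash
sudo fallocate -l 4G /swapfile
sudo chmod 600 /swapfile
sudo mkswap /swapfile
sudo swapon /swapfile
# Keep the swap file active after a reboot
echo '/swapfile none swap sw 0 0' | sudo tee -a /etc/fstab
```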
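To confirm the kernel is actually using the swap file, for example after a reboot, look for it in `/proc/swaps`, the kernel’s list of active swap areas. A hypothetical sketch (`swap_active` is our name), with both paths overridable so it can be tested:

```bash
# Hypothetical helper: succeed if SWAPFILE is listed as active in /proc/swaps.
# The first line of /proc/swaps is a header, so it is skipped.
# swap_active [SWAPFILE] [PROC_SWAPS]
swap_active() {
  awk -v f="${1:-/swapfile}" 'NR > 1 && $1 == f { found = 1 }
                               END { exit !found }' "${2:-/proc/swaps}"
}
```

For example, `swap_active && free -h` shows the swap totals only when `/swapfile` is in use.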

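For better network efficiency, adjust TCP settings. `sysctl -w` applies the changes immediately; writing them to a file in `/etc/sysctl.d/` keeps them across reboots:

```bash
sudo sysctl -w net.ipv4.tcp_congestion_control=bbr
sudo sysctl -w net.core.somaxconn=1024
# Persist the settings across reboots (the file name is arbitrary)
printf 'net.ipv4.tcp_congestion_control=bbr\nnet.core.somaxconn=1024\n' | sudo tee /etc/sysctl.d/99-ai-vps.conf
```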
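To confirm the TCP settings are live, read them back. `sysctl net.ipv4.tcp_congestion_control` does this interactively; the hypothetical helpers below (`sysctl_value` and `check_tuning` are our names) read the same values from `/proc/sys`, where the dots in a key become slashes, so the check can be scripted.

```bash
# Hypothetical helpers for reading kernel parameters from /proc/sys.
# sysctl_value KEY [PROC_SYS_ROOT]
#   e.g. net.ipv4.tcp_congestion_control -> /proc/sys/net/ipv4/tcp_congestion_control
sysctl_value() {
  local path
  path=$(printf '%s' "$1" | tr . /)
  cat "${2:-/proc/sys}/$path"
}

# check_tuning [PROC_SYS_ROOT]: succeed if both tweaks from this section are active.
check_tuning() {
  [ "$(sysctl_value net.ipv4.tcp_congestion_control "${1:-}")" = bbr ] &&
    [ "$(sysctl_value net.core.somaxconn "${1:-}")" -ge 1024 ]
}
```

Calling `check_tuning && echo "TCP tuning active"` with no argument reads the live values from `/proc/sys`.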

These steps ensure your VPS is secure and optimized for AI workloads [1][3]. With this setup complete, you’re ready to install Ollama and deploy DeepSeek.


Installing DeepSeek and Ollama

Ollama Installation Guide

Once your VPS environment is set up, you can install Ollama by running:

```bash
curl -fsSL https://ollama.com/install.sh | sh
```

To confirm that the installation was successful, check the version:

```bash
ollama --version
```
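On Linux the install script also starts Ollama as a background service, and the server can take a moment to accept requests, for example right after a reboot. Ollama’s REST API exposes `GET /api/version`, so a script can poll it before pulling models. A hypothetical sketch (`wait_for_ollama` is our name) that assumes `curl` and Ollama’s default address, `127.0.0.1:11434`:

```bash
# Hypothetical helper: poll Ollama's GET /api/version once per second
# until it answers or TRIES attempts have failed.
# wait_for_ollama [BASE_URL] [TRIES]
wait_for_ollama() {
  local url tries i
  url=${1:-http://127.0.0.1:11434}
  tries=${2:-30}
  i=0
  while [ "$i" -lt "$tries" ]; do
    if curl -fsS "$url/api/version" >/dev/null 2>&1; then
      return 0
    fi
    i=$((i + 1))
    # Don't sleep after the final attempt.
    [ "$i" -lt "$tries" ] && sleep 1
  done
  return 1
}
```

For example, `wait_for_ollama && ollama pull deepseek-r1:7b` only starts the download once the server responds.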

DeepSeek Setup with Ollama

Take advantage of the NVMe storage speeds in your VPS by setting up the DeepSeek model for production. Start by downloading the model:

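```bash
ollama pull deepseek-r1:7b
```

The `7b` tag is a download of under 5 GB, which fits the minimum specs above. The 70B variant mentioned in the introduction (`deepseek-r1:70b`) is a download of over 40 GB and needs roughly that much memory to run, well beyond the recommended 32 GB of RAM, so choose the tag that matches your plan. Either way, make sure you have enough free space and a stable internet connection during the download.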

You can test the setup with a simple command:

```bash
ollama run deepseek-r1:7b "Explain quantum computing in simple terms"
```
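The same prompt can be sent over Ollama’s REST API, which is what applications on the VPS would normally use: `POST /api/generate` with a JSON body naming the `model` and `prompt`, plus `"stream": false` to get a single JSON reply whose `response` field holds the answer. The sketch below is hypothetical (the helper names and the `OLLAMA_URL` variable are ours). Its minimal escaper handles backslashes, double quotes, and newlines only, so use a real JSON tool such as `jq` for arbitrary input.

```bash
# Hypothetical helpers for calling Ollama's /api/generate endpoint with curl.
# OLLAMA_URL is our own variable name; Ollama listens on 127.0.0.1:11434 by default.
OLLAMA_URL=${OLLAMA_URL:-http://127.0.0.1:11434}

# json_escape STRING: escape backslashes, double quotes and newlines for a
# JSON string literal (other control characters are NOT handled).
json_escape() {
  printf '%s\n' "$1" |
    sed -e 's/\\/\\\\/g' -e 's/"/\\"/g' |
    awk 'NR > 1 { printf "%s", "\\n" } { printf "%s", $0 }'
}

# generate_payload MODEL PROMPT: build a non-streaming request body.
generate_payload() {
  printf '{"model":"%s","prompt":"%s","stream":false}' \
    "$(json_escape "$1")" "$(json_escape "$2")"
}

# ollama_generate MODEL PROMPT: POST the request and print the raw JSON reply.
ollama_generate() {
  generate_payload "$1" "$2" |
    curl -fsS "$OLLAMA_URL/api/generate" \
      -H 'Content-Type: application/json' -d @-
}
```

For example, `ollama_generate deepseek-r1:7b "Explain quantum computing in simple terms"` prints the JSON reply; if `jq` is installed, pipe it through `jq -r .response` to see just the answer.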

Running DeepSeek as a Service

Ollama’s Linux install script already registers a systemd service, `ollama.service`, that runs `ollama serve` and exposes the API on `127.0.0.1:11434`. Because `ollama run` is an interactive client rather than a server, it is not a suitable `ExecStart` for a long-running unit. To keep DeepSeek available continuously, extend the existing service with an override instead:

```bash
sudo systemctl edit ollama.service
```

Add the following configuration in the editor that opens:

```ini
[Service]
Environment="OLLAMA_KEEP_ALIVE=-1"
```

A negative `OLLAMA_KEEP_ALIVE` tells Ollama to keep a loaded model in memory indefinitely instead of unloading it after the default five minutes of inactivity.

Next, reload systemd, enable the service at boot, and restart it:

```bash
sudo systemctl daemon-reload
sudo systemctl enable ollama.service
sudo systemctl restart ollama.service
```

Once the first request (for example the `ollama run` test above) has loaded the model, it stays in memory, taking full advantage of your VPS uptime.

To monitor the service and resource usage, use these commands:

```bash
sudo systemctl status ollama.service
journalctl -u ollama.service -f
```

For a closer look at system performance, tools like htop can also be helpful.
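To check that the model is actually resident in memory (rather than just downloaded), `ollama ps` lists loaded models, and the REST API exposes the same information at `GET /api/ps` as JSON with a `models` array. The hypothetical helper below (`model_loaded` is our name) reads that JSON on stdin and looks for an exact model name; it is a plain text match, not a JSON parser.

```bash
# Hypothetical helper: succeed if MODEL appears as a "name" in the JSON
# returned by GET /api/ps (read on stdin). Whitespace is stripped first so
# compact and pretty-printed JSON both match; this is a text match, not a parser.
model_loaded() {
  tr -d ' \n\t' | grep -qF "\"name\":\"$1\""
}
```

For example: `curl -s http://127.0.0.1:11434/api/ps | model_loaded deepseek-r1:7b && echo "deepseek-r1:7b is loaded"`. Because the closing quote is part of the match, `deepseek-r1:7b` does not falsely match `deepseek-r1:70b`.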

Performance and Problem Solving

Speed and Resource Management

Once DeepSeek is deployed as a service, keep an eye on resource usage with tools like htop and iotop to spot any bottlenecks. Here are some ways to optimize performance:

| Resource | Optimization Strategy | Expected Impact |
|---|---|---|
| CPU | Enable multi-threading and set process priorities | Up to 30% faster inference |
| RAM | Allocate at least 16GB and configure swap space | Avoids out-of-memory errors |
| Storage | Use NVMe SSDs for storing models | 3-4x faster model loading |
| Network | Ensure high bandwidth and low latency | Shorter response times |

For better throughput, consider using response caching for frequently requested queries. Performance tests show that this can cut response times by up to 40% for common queries [2].
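Response caching of this kind lives in your application layer, in front of the model. A minimal file-based sketch, assuming a hypothetical cache directory and key scheme (`cached` and `CACHE_DIR` are our names): it hashes a key, such as the model name plus the prompt, with `sha256sum`, returns the stored answer on a hit, and otherwise runs the given command and stores its output. Cached answers never change, so this suits fixed, frequently repeated queries rather than conversations; cache invalidation is left out.

```bash
# Hypothetical response cache; CACHE_DIR is an assumed location.
CACHE_DIR=${CACHE_DIR:-/var/tmp/deepseek-cache}

# cached KEY COMMAND [ARGS...]
# Prints the stored output for KEY if present; otherwise runs COMMAND,
# stores its output only if it succeeds, and prints it.
cached() {
  local key file tmp
  key=$1
  shift
  file="$CACHE_DIR/$(printf '%s' "$key" | sha256sum | cut -d ' ' -f 1)"
  if [ -f "$file" ]; then
    cat "$file"
    return 0
  fi
  mkdir -p "$CACHE_DIR" || return 1
  tmp=$(mktemp "$CACHE_DIR/.tmp.XXXXXX") || return 1
  if "$@" > "$tmp"; then
    # Rename into place so readers never see a half-written entry.
    mv "$tmp" "$file" && cat "$file"
  else
    rm -f "$tmp"
    return 1
  fi
}
```

For example, `cached "deepseek-r1:7b|$prompt" ollama run deepseek-r1:7b "$prompt"` only calls the model the first time a given prompt is seen.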

Common Problems and Solutions

Running DeepSeek on a VPS can bring up a few challenges. Here’s how to handle the most common ones:

Resource Exhaustion

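Use cgroups (for example through systemd’s `MemoryMax=` and `CPUQuota=` settings on the Ollama service) to monitor and limit CPU and memory usage for the Ollama process. If resource usage remains high, either upgrade your VPS or switch to a smaller DeepSeek-R1 tag, such as `deepseek-r1:7b` instead of `deepseek-r1:70b`.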
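With systemd, these cgroup limits can be set on Ollama’s service unit (`ollama.service`, created by the install script) using `systemctl set-property`, which applies `MemoryMax=` and `CPUQuota=` immediately and stores them for future boots. The helper below is a hypothetical sketch (`ollama_limits` is our name) that derives values from the machine size; the 2 GB of RAM headroom and the one vCPU left free are our own assumptions, not recommendations from Ollama.

```bash
# Hypothetical helper: print systemd resource-control settings for Ollama.
# ollama_limits TOTAL_RAM_GB CPUS [HEADROOM_GB]
#   MemoryMax = total RAM minus HEADROOM_GB (default 2, an assumption)
#   CPUQuota  = 100% per vCPU, leaving one vCPU for the rest of the system
ollama_limits() {
  local mem cores
  mem=$(( $1 - ${3:-2} ))
  if [ "$mem" -lt 1 ]; then
    echo "not enough RAM left after headroom" >&2
    return 1
  fi
  cores=$(( $2 - 1 ))
  if [ "$cores" -lt 1 ]; then
    cores=1
  fi
  echo "MemoryMax=${mem}G CPUQuota=$(( cores * 100 ))%"
}
```

For example, `sudo systemctl set-property ollama.service $(ollama_limits 16 8)`; the substitution is left unquoted on purpose so the two settings become separate arguments.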

Network Connectivity

If you face connection problems, check your firewall rules and ensure proper port forwarding. Use nethogs to track network usage by process and pinpoint any bandwidth issues.
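By default Ollama’s API listens only on `127.0.0.1:11434`, so it is reachable from the VPS itself but not from outside; a client that cannot connect remotely may simply be hitting that default rather than a firewall problem. `ss -ltn` shows which addresses and ports are listening. This hypothetical helper (`port_listening` is our name) reads that output on stdin and checks for a port.

```bash
# Hypothetical helper: read `ss -ltn` output on stdin and succeed if some
# socket listens on PORT. Column 4 is "Local Address:Port"; the header line
# is skipped. Comparing the exact ":PORT" suffix keeps 80 from matching 8080.
port_listening() {
  awk -v p=":$1" 'NR > 1 && substr($4, length($4) - length(p) + 1) == p { found = 1 }
                  END { exit !found }'
}
```

For example, `ss -ltn | port_listening 11434 && echo "Ollama is listening"`; the same `ss -ltn` output also shows whether the address is `127.0.0.1` or a public one.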

Model Loading Errors

If the model fails to load:

- Make sure there’s enough disk space.

- Verify the model file’s integrity.

- Update Ollama to the latest version.

- Clear the model cache if needed.
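For the disk-space and integrity checks above, a practical pattern is to confirm free space where Ollama stores models, then remove and re-pull the model; `ollama pull` verifies the SHA-256 digest of what it downloads. The sketch below assumes the Linux service’s default model directory, `/usr/share/ollama/.ollama/models`. The helper names and the `OLLAMA_BIN`/`MODELS_DIR` overrides are hypothetical.

```bash
# Hypothetical repair helper. OLLAMA_BIN and MODELS_DIR are our own overrides.
OLLAMA_BIN=${OLLAMA_BIN:-ollama}
MODELS_DIR=${MODELS_DIR:-/usr/share/ollama/.ollama/models}

# free_gb DIR: free space (GiB, GNU df) on the filesystem holding DIR,
# falling back to / if DIR does not exist yet.
free_gb() {
  local dir
  dir=$1
  [ -d "$dir" ] || dir=/
  df -BG --output=avail "$dir" | tail -n 1 | tr -dc '0-9'
}

# repair_model MODEL NEED_GB: check space, then remove and re-download MODEL.
repair_model() {
  local avail
  avail=$(free_gb "$MODELS_DIR")
  if [ "${avail:-0}" -lt "$2" ]; then
    echo "only ${avail:-0} GiB free for $MODELS_DIR, need $2 GiB" >&2
    return 1
  fi
  # Removing first is allowed to fail (the model may already be gone).
  "$OLLAMA_BIN" rm "$1" || true
  "$OLLAMA_BIN" pull "$1"
}
```

For example, `repair_model deepseek-r1:7b 10`, where the required space is the model’s download size plus whatever margin you want to keep free.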

Security Setup

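To secure access to DeepSeek, keep in mind that Ollama’s API has no built-in authentication and listens only on `127.0.0.1:11434` by default. Keep it bound to localhost and put a reverse proxy such as Nginx in front of it to terminate TLS, check API keys, and enforce role-based access control for DeepSeek queries.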
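One common way to add TLS and a shared API key in front of Ollama is an Nginx reverse proxy on port 443 (already open in the firewall), with Ollama itself left on `127.0.0.1:11434`. The function below is a hypothetical generator (`nginx_ollama_site` is our name) that prints such a server block. The certificate paths assume Let’s Encrypt certificates issued by certbot, and the single `Bearer` token is a simple shared-key scheme rather than full role-based access control; review the output before placing it in `/etc/nginx/sites-available/`.

```bash
# Hypothetical generator for an Nginx site that terminates TLS and requires
# "Authorization: Bearer <API_KEY>" before proxying to Ollama on localhost.
# nginx_ollama_site SERVER_NAME API_KEY
nginx_ollama_site() {
  cat <<EOF
server {
    listen 443 ssl;
    server_name $1;

    # Assumed certbot/Let's Encrypt certificate locations.
    ssl_certificate     /etc/letsencrypt/live/$1/fullchain.pem;
    ssl_certificate_key /etc/letsencrypt/live/$1/privkey.pem;

    location / {
        # Reject requests that do not carry the shared API key.
        if (\$http_authorization != "Bearer $2") {
            return 401;
        }
        proxy_pass http://127.0.0.1:11434;
        proxy_set_header Host localhost:11434;
        # Long generations can exceed Nginx's 60s default read timeout.
        proxy_read_timeout 300s;
    }
}
EOF
}
```

For example, generate a key with `key=$(openssl rand -hex 32)`, then run `nginx_ollama_site ai.example.com "$key" | sudo tee /etc/nginx/sites-available/ollama`; clients send `Authorization: Bearer $key`. The hostname here is a placeholder.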

For sensitive data, activate disk encryption. Use centralized logging to keep track of security events. Employ intrusion detection tools to monitor activity and perform regular security audits with vulnerability scanners.

Summary and Resources

Setup Checklist

Once you’ve completed the deployment, go through this checklist to confirm that everything is set up correctly:

| Stage | Key Requirements | Verification Steps |
|---|---|---|
| VPS Setup | 8 GB RAM, 4 vCPU cores, 12 GB storage (minimum) | Check with `free -h`, `nproc`, and `df -h` |
| Base Installation | Ubuntu 24.04 LTS | Check with `lsb_release -a` |
| Ollama Setup | Latest version | Check with `ollama --version` |
| DeepSeek Model | Enough storage space for the chosen tag | Check with `ollama list` |
| Security | Firewall, SSL/TLS certificates (configured in Security Setup) | Check with `sudo ufw status` |
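The verification steps can be scripted so the whole checklist runs in one go. This is a hypothetical sketch (`run_check` and `verify_deployment` are our names); it uses commands already mentioned in this guide plus `ollama show` to confirm the model is present, and assumes the `deepseek-r1:7b` tag unless you pass another.

```bash
# Hypothetical post-deployment checklist runner.
# run_check NAME COMMAND [ARGS...]: print [PASS]/[FAIL] and return the result.
run_check() {
  local name
  name=$1
  shift
  if "$@" > /dev/null 2>&1; then
    printf '[PASS] %s\n' "$name"
  else
    printf '[FAIL] %s\n' "$name"
    return 1
  fi
}

# verify_deployment [MODEL]: run the checklist; the exit status is the
# number of failed checks.
verify_deployment() {
  local model failed
  model=${1:-deepseek-r1:7b}
  failed=0
  run_check "Ollama installed" ollama --version || failed=$((failed + 1))
  run_check "Ollama service active" systemctl is-active --quiet ollama || failed=$((failed + 1))
  run_check "Model $model present" ollama show "$model" || failed=$((failed + 1))
  # sudo -n fails instead of prompting if a password would be required.
  run_check "Firewall active" sh -c 'sudo -n ufw status | grep -q "Status: active"' || failed=$((failed + 1))
  return "$failed"
}
```

For example, `verify_deployment deepseek-r1:7b; echo "failed checks: $?"`.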

Ongoing Maintenance Support

For configuration help and API details, refer to the Ollama documentation portal at https://ollama.com/docs [1]. If you have questions about specific models, the DeepSeek GitHub repository is a great resource for troubleshooting guides and community discussions [3].

Here are the main support channels to keep in mind:

- Ollama documentation: https://ollama.com/docs

- DeepSeek GitHub repository

- VPS provider security guidelines

If you encounter installation issues, check out the issues section on the Ollama GitHub repository for potential solutions [4].